Revealed: Inside AI's Financial Brain - The Investment Data Training Generative AI

It's more expert than you or I ever will be, with caveats...

How many years did it take you to learn financial theory, interpret SEC filings, forecast earnings, analyze financial statements, build valuation models, run portfolio back-tests, or understand capital markets strategy? For me, it’s been thirty years and I’m still learning.

And yet, in just the last three years, generative AI models have gone from toddlers to experts in investment analysis. Their ability to internalize complex frameworks, recall them instantly, and apply them consistently is remarkable. Unlike me, who can’t remember what I watched on Netflix last night, these models don’t forget. Their memory is near perfect. That’s when you start to see the asymmetry.

It’s natural, then, that we’re beginning to rely on these tools more every day. But let’s not get carried away. They don’t have decades of lived investment experience. They don’t have our professional networks, judgment built from mistakes, or the subtle behavioral cues that define high-stakes investing. These are precisely the elements human analysts still bring to the table. In fact, research shows that the most effective approach to investment analysis is human + machine. Together, they outperform either alone. (“Phew!”)

But to trust the AI in the loop, we need to build confidence in what the models actually know. Just as you'd review the résumé of a research assistant, it helps to interrogate the models themselves - a process known as meta prompting. That is, prompting the model about its own training. What kind of financial knowledge has it actually internalized? Where is it strong? Where is it thin?

What Financial Market Information Have These Models been Exposed to in Training?

This is the million-dollar question. No model will disclose its training set in full (imagine interviewing a job candidate who refuses to name the courses they’ve studied). Presumably, some of the data is “borrowed” from sensitive or copyrighted sources. But we can get surprisingly close to the truth using meta prompting and cross-verifying answers across models.

Here’s one important caveat: no current model is trained on paywalled material. That means no real-time market data, no Bloomberg terminals, and no sell-side research notes behind a paywall.

ChatGPT-5

Training Cut-off: June 2024

Web access: Enabled via Retrieval-Augmented Generation (RAG) post-cutoff

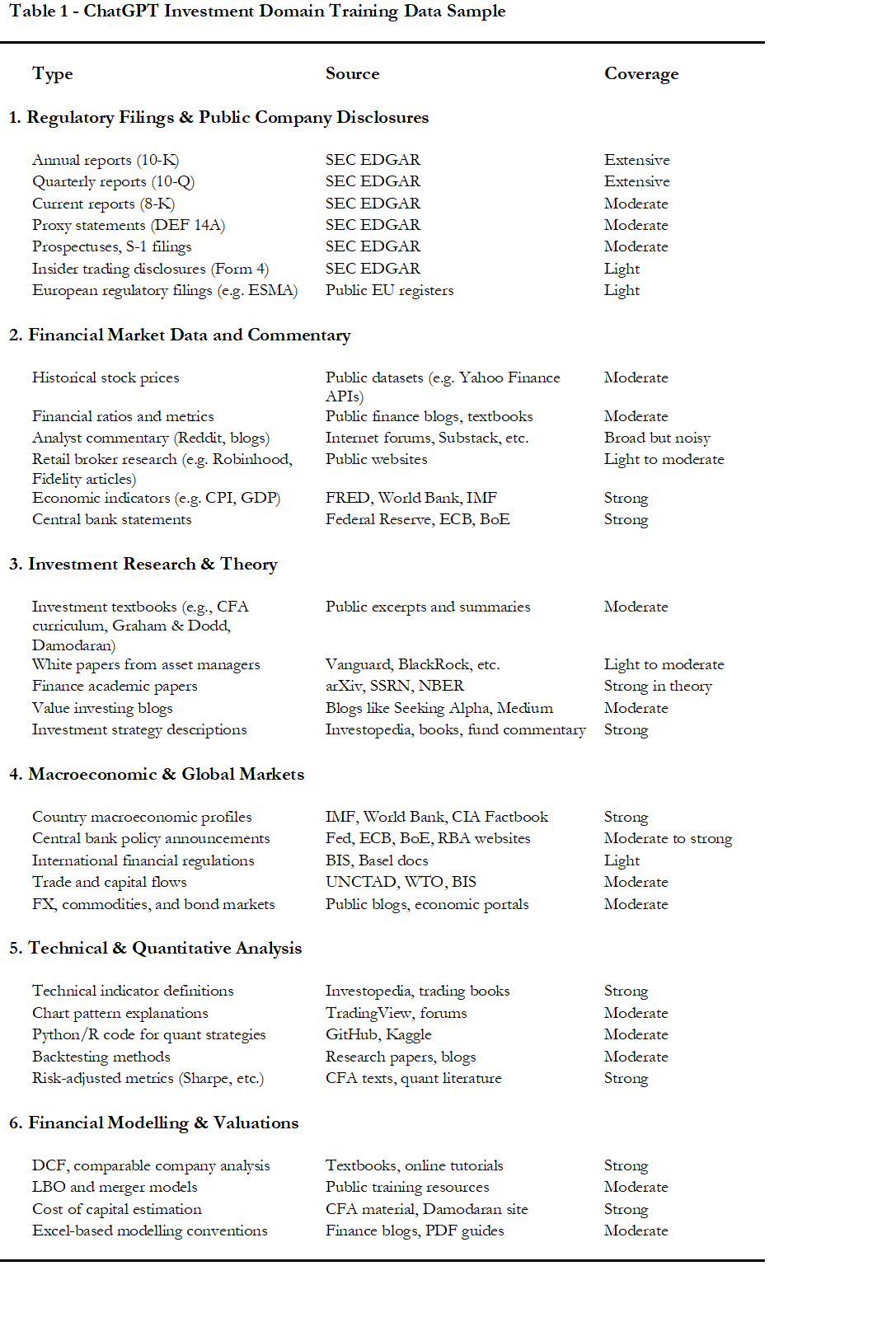

When I asked ChatGPT what financial information it had been trained on that’s relevant to investment analysis, its answer was refreshingly structured - outlining categories, examples, sources, and even grading its coverage from “light” to “extensive.”

Table 1 summarizes this comprehensive list.

Extensively Covered:

SEC filings (10-Ks, 10-Qs), valuation methodologies, economic indicators and central bank communications, finance academic papers, investment strategy styles, country macro profiles, technical/quant analysis, and cost of capital frameworks.Moderately Covered:

Proxy filings, historical price series, financial ratios, finance textbooks, investing blogs, capital flows, FX and commodity markets, bond data, technical chart patterns, back-testing methodologies, LBO/merger models, and Excel modeling conventions.

(Note: "moderate" often reflects the volume of public data available or its relative presence in the training corpus, rather than capability alone.)Lightly Covered:

International (non-US) regulatory frameworks, broker research, and white papers from asset managers (typically gated or proprietary).

Gemini 2.5:

Training Cut-off: June 2024

Post-cutoff Access: Live web search via Google

Gemini shares the same June 2024 training cut-off as ChatGPT-5, but it has a distinct advantage: real-time access to the full breadth of Google Search. This allows it to incorporate context from recent news and data sources — a powerful supplement in dynamic market environments.

When prompted about its investment research capabilities, Gemini rates its training coverage as extensive across multiple core domains:

Fundamental financial data: SEC filings, financial ratios, and investor relations website content

Market data: Historical price series, bid/ask spreads, trading volumes, and index-level data sourced from platforms such as Bloomberg, FactSet, and Reuters (note: not real-time data)

Macroeconomic and geopolitical series

Technical and quantitative indicators

Investment instruments and finance theory textbooks

Peer-reviewed academic papers

It assesses its moderate coverage of equity research reports — a reflection of limitations around paywalled content — and light to moderate exposure to alternative datasets. These include non-traditional sources often used in alpha generation: satellite imagery (e.g., parking lots), credit card data, website traffic metrics, and social media sentiment.

Financial Theory: Influential Thinkers in Gemini’s Training

Gemini also offers insight into the conceptual DNA of its financial understanding. It reports training on key theories including valuation frameworks, modern portfolio theory, the efficient markets hypothesis, behavioral finance, and financial econometrics, heavily shaped by foundational thinkers:

Benjamin Graham: Considered the father of value investing, his seminal works The Intelligent Investor and Security Analysis inform Gemini’s grasp of fundamental valuation and rational investing principles.

Warren Buffett & Charlie Munger: Decades of shareholder letters and public interviews provide a practical, high-signal training corpus on long-term capital allocation and value investing philosophy.

Eugene Fama & Kenneth French: Their factor models, particularly the Fama-French three-factor model, are central to Gemini’s understanding of asset pricing and risk premiums.

Aswath Damodaran: Widely regarded as the “Dean of Valuation,” Damodaran’s books and freely available course materials serve as a key source for practical valuation methodologies.

Harry Markowitz: A cornerstone of Gemini’s portfolio construction logic, Markowitz’s work on diversification and risk management (Modern Portfolio Theory) features heavily in its modeling of tradeoffs and efficient frontiers.

These disclosures offer transparency that’s both reassuring and strategically useful. Gemini’s responses are grounded not only in broad data exposure but also in the intellectual frameworks of the field’s most trusted thinkers. The model’s self-rated “extensive” coverage of market data is worth testing — especially when compared with ChatGPT’s more modest “moderate” rating in this area.

The key takeaway? Different models may excel at different investment tasks. Understanding their training inputs is the first step in using them wisely.

Claude (Sonnet 4)

Training Cut-off: January 2025

Post-cutoff Access: Web retrieval enabled

Claude’s training footprint closely mirrors that of Gemini and ChatGPT, but it includes some specific disclosures that are worth noting.

Claude rates its understanding of alternative investment strategies such as private equity and venture capital as moderate, citing the relative scarcity of publicly available information in these domains. Interestingly, it rates its exposure to ESG and sustainable investing topics as moderate to extensive, aligning with Anthropic’s emphasis on ethical frameworks and socially responsible AI behavior.

However, Claude openly notes its limitations in certain areas. It reports light coverage of:

Proprietary trading strategies and internal methods used by financial institutions

Real-time market data

Private markets transaction details

Complex and specialized derivative instruments

These caveats highlight the inherent opacity of certain investment domains — particularly those shielded by commercial secrecy or regulatory barriers.

Comparing the Models’ Financial Training Coverage

Across ChatGPT, Gemini, and Claude, one thing is clear: all three models have been extensively trained on core finance knowledge. That includes:

Company filings (e.g., 10-Ks, 10-Qs)

Financial statement analysis

Valuation methodologies

Portfolio theory

Quantitative and technical indicators

Investment strategy frameworks

Publicly available financial metrics and academic research

Where the models begin to differentiate meaningfully is in their coverage of market pricing data.

Gemini self-rates its training on price series as extensive

ChatGPT rates itself as moderate

Claude discloses light coverage in this area

However, it’s important to clarify what “coverage” really means in this context. It doesn’t mean the models can recall or reproduce full time series from memory — licensing constraints generally prevent that. Rather, it means the model was exposed to a sufficient volume of price data during training to learn patterns, volatility dynamics, correlation structures, and macro/market relationships.

In this regard, Gemini may be better equipped to handle prompts that involve pattern recognition in price data, while Claude may be more limited in its recall of historical pricing context.

The Limits of Data Recall

Ask any of these models to fetch a full price series - say, “Give me a .csv file of daily OHLC prices for the S&P 500 over the past three years” and you’ll quickly hit the boundaries of their capabilities.

ChatGPT can walk you through where and how to download the data — and may attempt to help but in testing, it only returned a few rows of data.

Gemini will explain the process but defer to third-party sources.

Claude takes a similar approach.

Grok (xAI) once attempted to fabricate a dataset by interpolating daily prices from monthly averages - a creative but inaccurate workaround.

The bottom line: these models are not financial databases. They are reasoning engines, designed to interpret, contextualize, and analyze data - not to store and retrieve it verbatim.

Best practice: always supply the data you want analyzed. Let the models do what they’re best at: Uncovering insights, building narratives, and offering structured outputs.

Investment Report Generation Capabilities

Given their training across a vast array of public financial documents, it’s not surprising that today’s leading models are also capable of replicating common formats of investment reporting.

Models like ChatGPT and Gemini state they can generate:

Equity research reports and public company summaries

Valuation models and summaries

Macroeconomic and sector outlook reports

Quantitative and technical strategy briefs

Personal and institutional investment policy statements

They also support output formatting across presentation layers:

PowerPoint decks

Executive summaries and one-pagers

Excel-based tables and sensitivity analyses

Structured investor memos and client briefings

This makes them well-suited as productivity multipliers — especially for analysts, PMs, and advisors looking to scale the production of high-quality deliverables.

Conclusions

Current generative AI models have been trained on an extraordinarily broad spectrum of publicly available financial information and data - from price series and macroeconomic indicators to company filings, investment strategy breakdowns, valuation methodologies, and regulatory frameworks. Their grasp of finance theory is deep, and their ability to recall and apply it across a range of analytical contexts often rivals that of experienced professionals. These models can replicate the structure and logic of complex financial analyses with remarkable consistency, bounded only by licensing constraints and the data they were exposed to during training.

This depth of training makes them powerful research assistants. For investors, it means faster, deeper insights and in many cases, a technical edge that would be difficult to match manually.

But it’s important to temper that enthusiasm with caution. Like any tool, AI models are only as good as the information they’re given. When data is missing or ambiguous, they may confidently produce flawed outputs - known as “hallucinations.” Do not rely on them to pull price series as the data may be incomplete at best or an outright fabrication in certain circumstances. It remains best practice to provide the models with critical data upon which to analyze.

The takeaway? Use AI to accelerate your thinking, not replace it. Provide models with key facts and figures - just not personal or proprietary data - and always apply your own judgment. These tools are impressively well-read, strong at reasoning, problem solving and pattern matching, but do not replace financial databases.

As always, Inference never stops. Neither should you.

Andy West

The Inferential Investor